Philosophy is often about “big concepts”: concepts such as knowledge, understanding, autonomy, transparency, intelligence, and creativity. All these concepts are at stake in current research in data science and artificial intelligence. It seems inescapable that we lose some of our own autonomy once our cars start driving autonomously and our houses become smarter and smarter. Computers have outsmarted us at number crunching for decades, but will they also outsmart us in creativity? Will they become the “better scientists”, or will there always remain a difference between “pure prediction” and “real understanding”? Is predictive success acceptable even if it comes at the cost of transparency? After all, transparency is something we are very much concerned about, not only in science but in all kinds of political and societal contexts. At the same time, privacy and data protection laws are a major theme in public discourse as well. Consider tracking apps, for instance: do we really want to become transparent citizens and consumers, X-rayed, as it were, by a machine learning algorithm no one might actually understand?

Coordinating concepts and experiences

These are only a few of the many well-known questions that people are currently concerned about in relation to AI and data science. What might be the role of philosophy in this context? According to A.N. Whitehead, the purpose of doing philosophy is “to coordinate the current expressions of human experience” (Whitehead 1933: 286). Of course, the use of such concepts as knowledge, transparency, creativity, and autonomy stretches across various contexts of experience, from everyday business to global politics, science, and so on. Thus, philosophy is about taking these different contexts seriously and gaining a better understanding of “big concepts” by putting their different uses and connotations into a meaningful structure. Such a structure, however, cannot be fixed once and for all. This is what Whitehead’s phrase “current expressions of human experience” emphasizes. We do not experience things the same way (and maybe not even the same things) as people did a hundred or a thousand years ago. In order to distinguish more pervasive issues from what might rather be temporary particularities, it is therefore helpful to look at history and at how the current situation came about. What, for instance, is really new about contemporary data science compared to the data-based (observational) research conducted in the past? Might “understanding” or “creativity” be good terms to characterize and distinguish software pre-trained on human games, like AlphaGo, from fully self-trained software like AlphaZero? Or is the use of these terms to be restricted to actions carried out solely by human beings, and if so, why?

Tackling these questions is likely to reveal shifts in the meanings and implications of those “big concepts”. And it will lead to a critical and historical awareness which, in turn, will reduce the danger of becoming obsessed, panicked, or paralyzed by contingent current developments. In fact, such an awareness may even lead to a kind of toolbox, increasing one’s ability to cope with present and future obstacles (cf. Sieroka et al. 2018; also for the relation to the notion of responsibility).

Some examples

Let us assume transparency is indeed of fundamental value. But so is the saving of human life. So what about, for instance, using an “intransparent” emergency care app on my smartphone? If my concern is about whether I am having a heart attack and what to do next, I am not so much worried about the transparency of some underlying biomedical theorizing. Moreover, there might not be that much “scientific transparency” in my physician’s claim about whether I had a heart attack or not, either. Why should I then mistrust “Doctor App” on my smartphone, whose diagnosis is based on the data of fifty million heart attacks, say, whereas my physician has experienced maybe fifty cases altogether? However, one might argue that this should not stop us from aiming at “transparency” in the long run. Even if “Doctor App” is currently more successful in its predictions, its effectiveness may not be sustainable or resilient if there is no understanding of the underlying physiological processes involved. That is, there is a danger of “Doctor App” relying too heavily on a particular set of data, which would then hinder its adaptability to new data and to a wider range of applications.

This may suffice to illustrate that many further questions arise about “transparency” in the context of knowledge acquisition, especially as it can be based on very different research agendas (theory formation vs. exploration vs. problem solving). Moreover, transparency issues are also crucial in moral contexts: presumably I do not want “Doctor App”—after it stumbled across the abnormal values in my last liver function test—to secretly order a new liver on the darknet.

This example also leads to further questions about autonomy, both regarding increased autonomy on the side of AI systems and possible losses of autonomy on the side of human beings. What kind of autonomy do I lose, for instance, if at some later stage I live in a home for the elderly and am looked after by a robot? The main worry here is related to a loss of dignity and self-esteem. There are surely contexts in which I would experience treatment by “only a robot” as impersonal and unworthy. But then again, there might also be contexts in which I would feel less embarrassed having “only a robot” witness my bodily deterioration.

The philosopher T.W. Adorno already sensed the decline of the West and the rise of fascism when encountering automatic doors. That is, long before concerns about care robots, the simple loss of a standard door handle meant for him the loss of a “core of experience”, wresting away part of one’s individual agency in passing (or not passing) through a door (Adorno [1951] 1997: 40). Admittedly, I am then no longer the one who opens the door. But to what extent is this really a relevant loss of autonomy? If autonomy is about making decisions which are not (causally or otherwise) fixed, then I am still autonomous in either passing through the door or not. Hence, while part of my action does become automated, one may question whether this part is really the most relevant one in terms of individual autonomy. Or, to provide a different but not unrelated example, consider autonomous driving: if my car drives autonomously, there are admittedly many decisions which I no longer make myself (when to signal, how fast to accelerate ...). However, there are many other decisions I may now make instead. I might decide to change the radio station or whether to look out of the left or the right window. Thus, there might be no overall loss in my autonomy, even though my car might have gained some. Maybe the space of possibilities has widened.

Here, again, the attempt to coordinate experiences and concepts is revealing: if autonomy is about previously unfixed decisions, then my car may indeed be considered “autonomous” because there are outputs (its particular acceleration, say, when entering the motorway under current traffic conditions) which may be based on implemented algorithms but which are not fully fixed beforehand. So “being autonomous” might be synonymous with “self-programming” which, in turn, may raise further questions (a) about crucial shifts that happened in computer science and the computer industry over the past few decades (cf. Gugerli 2019) and (b) about relations to experiential contexts other than road traffic and AI. For instance, the question of whether or how a notion of “autonomy” applies not only to persons but also to cars might have severe legal consequences, too.

Digital design and material cultures

So far my discussion has focused on concepts (knowledge, autonomy, etc.) and thus on cognitive or mental content rather than on material objects. However, the advancement of data science and AI over the last decades has also gone hand in hand with crucial changes in our material culture. Not only can computer hardware, smartphones, etc. be found nearly everywhere; there has also been a huge change in what can be built, on large as well as small scales, based on machine learning algorithms, data-intensive 3D printing, etc.

The keyword here is “digital design” and the reason for treating it in the present context is that material objects, too, are part and parcel of our encounter with the world around us. Thus, if philosophy is about coordinating experiences, material cultures are to be considered as well. Let me briefly mention two examples from contemporary research as an illustration.

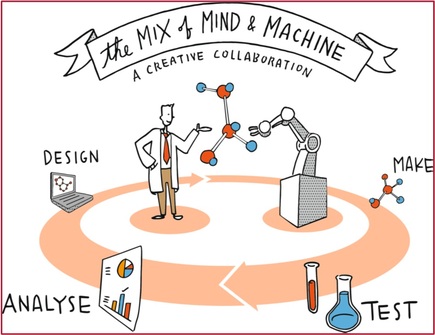

The first example is drug discovery. The advance of AI and data science continues to have a huge impact on the invention of new medicines (Schneider et al. 2020). Chemical analyses and adjustments in the composition of active ingredients can be automated, and thus the speed of development, which is often crucial in health care, can be increased immensely. Next, there are questions of personalized medicine, which is, of course, a very data-intensive enterprise. Moreover, many of the philosophical questions already mentioned above reappear in an interesting way in this “materialized” context. For instance, there are questions about creativity: what if a drug was designed (composed) by a machine learning process? How should we describe the role played by the scientist who initially set it up? What about the role of the algorithm, and what about the material setup which eventually produced the mixture?

Whereas drug discovery provides an important example of digital design at small scales (indeed down to molecular structures), an important example of digital design at large scales is architecture (Frazer 1995). Despite this difference in physical scale, however, crucial questions in relation to data science and AI are strikingly similar. In particular, they once more concern the role and dynamics of personalization, automation, and materialization. For instance, there are again questions about automated quality assurance (is this new material or new construction method really reliable?) and about personal autonomy (to what extent am I allowed to manually override the settings of my smart house?). Once more, there are efforts to reduce execution times, to use fewer material resources, to facilitate fabrication processes, to integrate different stakeholders on an equal footing, and so on; and, once more, this raises questions about creativity and the like.

Of course, the situations in architecture and drug discovery are not fully analogous. However, it might be revealing to compare some of their underlying aims and values in the context of digital design. Design processes, it seems, are always about efficacy; that is, they aim at optimizing something. In the present cases, this might be drug efficacy on the one hand and perhaps energy efficiency on the other. But how exactly can these types of efficacy be evaluated and weighed against drawbacks such as side effects (drug discovery) and functional unsuitability (architecture)? And what exactly is the role of “the digital” in this context? Again, is it only about improvements in number crunching, or is there something new and creative to it? What about a value as sweeping as beauty? One might argue that beauty is important in architecture but not in drug discovery. But what if beauty can, at least to some extent, be reduced (and maybe “number crunched”) to structural features such as symmetries? Is the preselection of molecules in drug design not also based on structural features?

Given all these thought-provoking questions, I am very happy that, after tackling drug discovery as RETHINK’s first challenge, we will now take architecture as our second challenge. The shift from small molecules to huge buildings will surely help us learn more about both specific and pervasive issues of modern data science and AI, and philosophy will contribute its share in “coordinating our experiences”.

References

Theodor W. Adorno: Minima Moralia: Reflections on a Damaged Life, trans. Edmund Jephcott. London: Verso 1997.

John Frazer: An Evolutionary Architecture. London: Architectural Association 1995.

David Gugerli: Digitalkolumne. Das Autonomieproblem digitaler Gesellschaften. In: Merkur. Deutsche Zeitschrift für europäisches Denken 73 (837), 2019, pp. 63-71.

Petra Schneider et al.: Rethinking Drug Design in the Artificial Intelligence Era. Nature Reviews Drug Discovery 19 (5), 2020, pp. 353-364 [DOI: 10.1038/s41573-019-0050-3].

Norman Sieroka, Vivianne I. Otto, and Gerd Folkers: Critical Thinking in Education and Research—Why and How? Guest Editorial, Angewandte Chemie (International Edition) 57 (51), 2018, pp. 16574-16575 [DOI: 10.1002/anie.201810397].

Alfred North Whitehead: Adventures of Ideas. Cambridge: CUP 1933.